Added April 16, 2021: This article has been updated to reflect the changes introduced by AWS Signature Version 4 support on Prometheus servers.

We recently announced Amazon Managed Service for Prometheus (AMP), which lets you create a fully managed, secure, Prometheus-compatible environment for ingesting, querying, and storing Prometheus metrics. In a previous AWS Management & Governance blog post, I showed you how to set up AMP to monitor a containerized environment. For some critical workloads, containerization is still a long way off, and sometimes it is not possible at all. This article describes how to use AMP with a system running on Amazon Elastic Compute Cloud (Amazon EC2) or in an on-premises environment.

Setup

This example is described in the following steps.

The corresponding architecture can be visualized as follows:

This example uses the Ireland (eu-west-1) Region. To see which AWS Regions support the service, see the AWS Regional Services List.

Amazon EC2 setup

The first step in this walkthrough is to set up your EC2 instance. You will host the application on this instance and send its metrics to the AMP workspace that you create later. We recommend that you attach an IAM role to the instance. Attaching the AmazonPrometheusRemoteWriteAccess policy to this role gives the instance the minimum permissions it needs.

Demo application

Once the instance is set up, log in to it and run the sample application. Create a file named main.go and add the content shown below. The application uses the Prometheus HTTP handler to expose some system metrics over HTTP automatically. You can also use the Prometheus client library to implement your own metrics.

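    package main

    import (
        "log"
        "net/http"

        "github.com/prometheus/client_golang/prometheus/promhttp"
    )

    func main() {
        // Expose the default Go runtime and process metrics at /metrics.
        http.Handle("/metrics", promhttp.Handler())
        // Serve on port 8000 and log the error if the server stops.
        log.Fatal(http.ListenAndServe(":8000", nil))
    }

Before running the sample application, make sure that all the dependencies are installed.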

    sudo yum update -y
    sudo yum install -y golang
    go get github.com/prometheus/client_golang/prometheus/promhttp
    go run main.go

The application should now be running on port 8000. At this stage, you should be able to see all the Prometheus metrics published by your application.

    curl -s http://localhost:8000/metrics
    ...
    process_max_fds 4096
    # HELP process_open_fds Number of open file descriptors.
    # TYPE process_open_fds gauge
    process_open_fds 10
    # HELP process_resident_memory_bytes Resident memory size in bytes.
    # TYPE process_resident_memory_bytes gauge
    process_resident_memory_bytes 1.0657792e+07
    # HELP process_start_time_seconds Start time of the process since unix epoch in seconds.
    # TYPE process_start_time_seconds gauge
    process_start_time_seconds 1.61131955899e+09
    # HELP process_virtual_memory_bytes Virtual memory size in bytes.
    # TYPE process_virtual_memory_bytes gauge
    process_virtual_memory_bytes 7.77281536e+08
    # HELP process_virtual_memory_max_bytes Maximum amount of virtual memory available in bytes.
    # TYPE process_virtual_memory_max_bytes gauge
    process_virtual_memory_max_bytes -1
    # HELP promhttp_metric_handler_requests_in_flight Current number of scrapes being served.
    # TYPE promhttp_metric_handler_requests_in_flight gauge
    promhttp_metric_handler_requests_in_flight 1
    # HELP promhttp_metric_handler_requests_total Total number of scrapes by HTTP status code.
    # TYPE promhttp_metric_handler_requests_total counter
    promhttp_metric_handler_requests_total{code="200"} 1
    promhttp_metric_handler_requests_total{code="500"} 0
    promhttp_metric_handler_requests_total{code="503"} 0

Create an AMP workspace

To create a workspace, access AMP in the AWS console and enter a name for your workspace.

After creation, AMP will provide a URL for remote write and a URL for querying.
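As a rough illustration of how the two URLs relate, the following Go sketch builds both from a Region and a workspace ID. It assumes the host pattern of the remote_write URL used later in this walkthrough and the /api/v1/query suffix mentioned in the Grafana step. The function name workspaceURLs and the ID ws-EXAMPLE are hypothetical placeholders; always copy the real URLs from the AWS console.

```go
package main

import (
	"fmt"
	"strings"
)

// workspaceURLs returns the remote write and query URLs for an AMP workspace.
// Assumption: both URLs share the same workspace base and differ only in the
// API path, as the URLs in this walkthrough suggest.
func workspaceURLs(region, workspaceID string) (remoteWrite, query string) {
	base := fmt.Sprintf("https://aps-workspaces.%s.amazonaws.com/workspaces/%s",
		region, strings.TrimSpace(workspaceID))
	return base + "/api/v1/remote_write", base + "/api/v1/query"
}

func main() {
	// "ws-EXAMPLE" is a placeholder, not a real workspace ID.
	rw, q := workspaceURLs("eu-west-1", "ws-EXAMPLE")
	fmt.Println("remote write:", rw)
	fmt.Println("query:", q)
}
```

The remote write URL is the one Prometheus needs; the query URL is the one a visualization tool such as Grafana builds on.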

Run the Prometheus server

To install the latest stable version of Prometheus, including the expression browser, see the Prometheus Guide. This example installs Prometheus v2.26.0 on Amazon Linux as follows:

Create a new file named prometheus.yaml with the following content. In the remote_write URL, insert the ID of your AMP workspace, shown in the AWS console, between workspaces/ and /api/v1/remote_write.

    global:
      scrape_interval: 15s
      external_labels:
        monitor: 'prometheus'

    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:8000']

    remote_write:
      - url: https://aps-workspaces.eu-west-1.amazonaws.com/workspaces//api/v1/remote_write
        queue_config:
          max_samples_per_send: 1000
          max_shards: 200
          capacity: 2500
        sigv4:
          region: eu-west-1

You're finally ready to run Prometheus and send your application metrics to AMP.

    prometheus --config.file=prometheus.yaml

Metrics are now sent to AMP! You should see the output in the console of the Prometheus server.
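The sigv4 setting in the configuration tells Prometheus to sign each remote write request with AWS Signature Version 4. For readers curious about what that involves, here is a minimal Go sketch of one step of the scheme, the derivation of the signing key through a chain of HMAC-SHA256 operations. It is not the code Prometheus runs, and it omits the canonical request and the final signature. The secret key and date are placeholders, and "aps" is used as the service name on the assumption that it matches the prefix of the IAM actions for AMP.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// hmacSHA256 returns HMAC-SHA256(key, data).
func hmacSHA256(key []byte, data string) []byte {
	mac := hmac.New(sha256.New, key)
	mac.Write([]byte(data))
	return mac.Sum(nil)
}

// deriveSigningKey chains four HMACs over the date (YYYYMMDD), the Region,
// the service name, and the fixed string "aws4_request".
func deriveSigningKey(secretKey, date, region, service string) []byte {
	kDate := hmacSHA256([]byte("AWS4"+secretKey), date)
	kRegion := hmacSHA256(kDate, region)
	kService := hmacSHA256(kRegion, service)
	return hmacSHA256(kService, "aws4_request")
}

func main() {
	// Placeholder credentials and date, for illustration only.
	key := deriveSigningKey("EXAMPLE-SECRET-KEY", "20210416", "eu-west-1", "aps")
	fmt.Println(hex.EncodeToString(key))
}
```

Because the date and Region are part of the key, a signature is only valid for the Region it was computed for, which is why the region value in the sigv4 block must match the Region of the workspace.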

Visualize metrics with Grafana

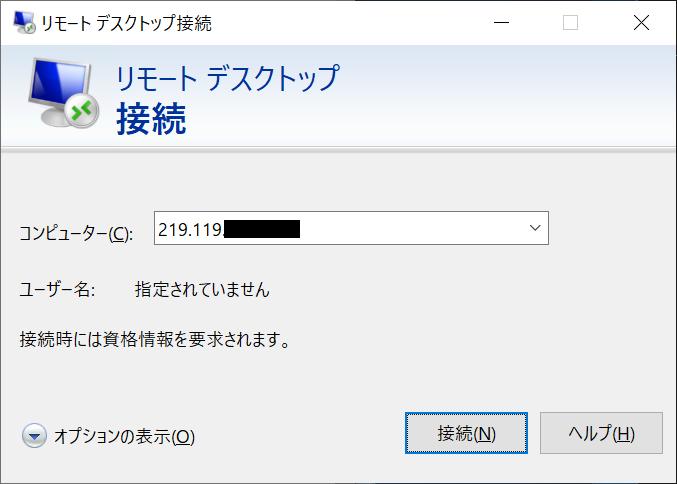

Grafana is a popular platform for visualizing Prometheus metrics. On your local machine, install Grafana and set the AMP workspace as your data source. To query the workspace, your local environment needs an Access Key ID and Secret Access Key with at least the following permissions:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": [
            "aps:GetLabels",
            "aps:GetMetricMetadata",
            "aps:GetSeries",
            "aps:QueryMetrics",
            "aps:DescribeWorkspace"
          ],
          "Effect": "Allow",
          "Resource": "*"
        }
      ]
    }

Before running Grafana, enable AWS SigV4 authentication so that queries to AMP are signed with your IAM permissions.

    export AWS_SDK_LOAD_CONFIG=true
    export GF_AUTH_SIGV4_AUTH_ENABLED=true
    grafana-server --config=/usr/local/etc/grafana/grafana.ini \
      --homepath /usr/local/share/grafana \
      cfg:default.paths.logs=/usr/local/var/log/grafana \
      cfg:default.paths.data=/usr/local/var/lib/grafana \
      cfg:default.paths.plugins=/usr/local/var/lib/grafana/plugin

Log in to Grafana and go to the data source settings page (/datasources) to add the AMP workspace as a data source. Do not add /api/v1/query to the end of the URL. Enable SigV4 auth, then under SigV4 Auth Details select the Authentication Provider and the appropriate Region.
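If you copy the query URL from the AMP console, the /api/v1/query suffix has to be removed before you paste it into Grafana. The small Go sketch below shows one way to do that. The function name dataSourceURL and the workspace ID ws-EXAMPLE are hypothetical and only serve the illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// dataSourceURL strips surrounding whitespace, trailing slashes, and a
// trailing "/api/v1/query" so that only the workspace base URL remains.
func dataSourceURL(queryURL string) string {
	u := strings.TrimRight(strings.TrimSpace(queryURL), "/")
	u = strings.TrimSuffix(u, "/api/v1/query")
	return strings.TrimRight(u, "/")
}

func main() {
	// Placeholder URL; "ws-EXAMPLE" is not a real workspace ID.
	in := "https://aps-workspaces.eu-west-1.amazonaws.com/workspaces/ws-EXAMPLE/api/v1/query"
	fmt.Println(dataSourceURL(in))
}
```

A URL that already lacks the suffix passes through unchanged apart from trailing slashes, so the helper is safe to apply in either case.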

Application metrics are now available. For example, try setting scrape_duration_seconds{} in the Metrics Browser. You should see a screen like the one below.

Summary

In this article, we used the recently announced Amazon Managed Service for Prometheus to walk through an architecture for collecting metrics in a non-containerized environment with Amazon EC2 instances. To automate metric collection on your EC2 instances, you can preconfigure an AMI with all the relevant dependencies or use AWS Systems Manager. See links for Amazon Managed Service for Prometheus, Amazon Managed Service for Grafana, and OpenTelemetry.

Rodrigue Koffi

Rodrigue is a Solutions Architect for Amazon Web Services. He is passionate about distributed systems, observability, and machine learning. He has extensive experience in DevOps and software development and likes programming in the Go language. His Twitter ID is @bonclay7.

The translation was done by Solutions Architect Fujishima. The original text is here. In translating this article, some screen captures have been replaced with the latest version at the time of translation, and some descriptions have been added.
